YOLO vs GPT: Large Language Model Surprisingly Good at Detecting Drones in VR Experiments

- ImAFUSA

- Dec 9, 2025

- 1 min read

Researchers at ImAFUSA partner ICCS-NTUA explored how well modern AI can detect drones in immersive virtual environments—where human attention and perception are studied under both rural and urban settings.

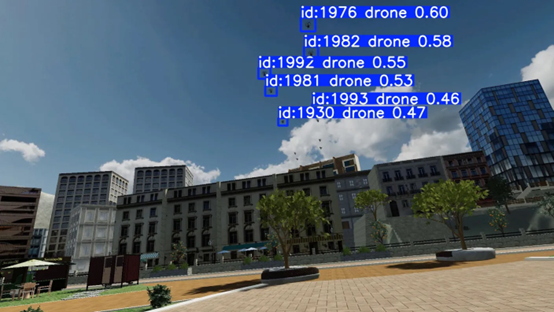

Using data from the ImAFUSA VR experiments conducted by colleagues at ISCTE, researchers from ICCS trained a custom drone detection model based on the YOLOv8 architecture.

YOLO (‘You Only Look Once’) architectures are widely used in real-time object detection tasks, making them well-suited for identifying drones in complex visual environments

Yet despite its sophistication, YOLO struggled with small, pixel-sized drones scattered in high-resolution frames. Even with high-end GPUs and advanced training techniques, including transfer learning, label refinement, adaptive resolution scaling, and real-time metric monitoring, the model tended to underestimate drone counts.

To compare, we evaluated GPT-4o's ability to estimate drone numbers from VR video frames. Surprisingly, the LLM outperformed YOLO significantly, showing better alignment with the ground truth (r = 0.91 vs 0.53).

The takeaway: While object detection models shine in many use cases, LLMs can offer a robust alternative for tasks like visual scene understanding—especially when training data is limited or objects are extremely small.

This raises exciting possibilities at the intersection of vision models and language models for future AI-driven perception systems.

ICCS's detailed study has been submitted for publication in Human Computer Interaction International (HCII) 2025.